Since streaming starts on the iPhone, “spatial” sound effects could at least be applied to streamed audio sent to the HAs. Since the MFi transmission protocol to HAs is undoubtedly neither Dolby Atmos nor DTS, iOS might use its knowledge of the listener’s audio space to translate what’s streamed to the HAs to imitate DTS. The other thing is that, since smartphone apps can adjust the “fit” of the HAs, Apple’s knowledge of the HA user’s personal audio space (trying to imitate the lingo you referred to in the Earbuds Pro 2.0 article) could always be handed over to the smartphone HA app, which could apply that “learning” to how the HAs reproduce sound picked up directly by the HA microphones. My meager knowledge of spatial sound as reproduced by DTS is that a lot of it depends on timing delays between ears. So maybe personal fit information could be integrated with the average pinna effect already built into HA programming for BTE microphones to improve the spatial perception of audio.

Maybe the answer to this is known. But I wonder how we learn directionality if we all have differently shaped pinnas? Perhaps we hear a noise and see where it came from, either in the same instant or by turning around to find its source. That could start when we are infants and continue on into life as we learn what sounds are like coming from different directions and distances. Perhaps the idea is just to restore more of our own unique pinna effect to make sound “directionality” more like what we learned when we were young (and had a lot better hearing!).

Edit_Update: Bonus Materials!

Wikipedia has an extensive article on how humans localize sound. Both time delays between ears and the relative level of a sound heard in each ear are important:

Sound localization - Wikipedia

I liked the discussion of “The Cone of Confusion…!”

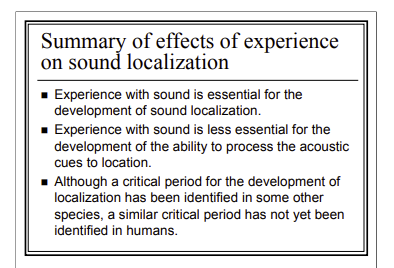
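
To get a feel for the time-delay cue and why the cone of confusion exists, here is a rough sketch of my own, not something taken from the Wikipedia article. It assumes a very simplified model: two point "ears" a fixed distance apart, a distant sound source, and no head in between to bend or shadow the sound. The ear spacing of 0.18 m and the function name `itd_seconds` are just assumptions for illustration.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # approximate speed of sound in air at room temperature
EAR_SPACING_M = 0.18         # assumed ear-to-ear distance; varies from person to person


def itd_seconds(azimuth_deg, ear_spacing_m=EAR_SPACING_M):
    """Interaural time difference for a distant source, simplified model.

    azimuth_deg is measured from straight ahead (0) toward one side (90),
    with 180 being directly behind. The extra path to the far ear is
    ear_spacing * sin(azimuth), so the delay is that distance divided by
    the speed of sound. Head diffraction and pinna effects are ignored.
    """
    path_difference_m = ear_spacing_m * math.sin(math.radians(azimuth_deg))
    return path_difference_m / SPEED_OF_SOUND_M_S


# A source 30 degrees to the front and one 30 degrees short of directly
# behind (150 degrees) produce the same delay in this model.
front = itd_seconds(30)
back = itd_seconds(150)
print(f"front: {front * 1e6:.1f} microseconds, back: {back * 1e6:.1f} microseconds")
```

In this model a source in front and its mirror image behind give identical delays, so timing alone cannot tell them apart. That ambiguity is the cone of confusion, and it is one reason the pinna's direction-dependent filtering matters.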

Two interesting articles: The first is an extensive presentation on the development of sound localization in young animals. Its last slide concludes that exposure to sound is necessary for the development of sound localization but that, given exposure, the ability to localize sound is not driven by sound experience and is (apparently) innate:

Source: https://faculty.washington.edu/lawerner/sphsc462/dev_loc.pdf

The second is a paper that studied sound localization in early-blind people and found that their ability to localize sound was better than that of sighted people, implying that training by visual cues does not play a critical role in sound localization ability.

Early-blind human subjects localize sound sources better than sighted subjects

Source: https://www.nature.com/articles/26228